The Hidden Algorithms Powering Your Coding Assistant

How Cursor and Windsurf Work Under the Hood

Imagine having an AI partner that helps you write code. Not just a tool that offers suggestions, but a true collaborator that understands what you're trying to build. This is happening now with tools like Cursor and Windsurf. But how do these AI coding assistants actually work? Let's explore the algorithms and systems that power them, using simple analogies to make the technical concepts easy to understand.

How They See Your Code

To be helpful, AI coding assistants need to understand your entire codebase. Both Cursor and Windsurf use sophisticated context retrieval systems to "see" your code.

Cursor indexes your entire project into a vector store – think of it as creating a smart map of your code where similar concepts are grouped together. At indexing time, Cursor uses a dedicated encoder model that specially emphasizes comments and docstrings to better capture each file's purpose. When you ask a question, Cursor uses a two-stage retrieval process: first, it performs a vector search to find candidate code snippets, then it uses an AI model to re-rank these results by relevance. It's like having a librarian who first grabs all books on a topic, then carefully sorts through them to find exactly what you need. This two-stage approach significantly outperforms traditional keyword or regex searches, especially for non-trivial questions about code behavior.
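To make the two-stage idea concrete, here is a minimal sketch. It is not Cursor's implementation: the bag-of-words `embed` function stands in for a real encoder model, `overlap_score` stands in for the AI re-ranker, and the file names and snippets are made up for illustration.

```python
import math
import re
from collections import Counter

def tokens(text):
    """Lowercase alphabetic tokens; a toy tokenizer for this sketch."""
    return re.findall(r"[a-z]+", text.lower())

def embed(text):
    """Toy stand-in for an encoder model: a bag-of-words count vector."""
    return Counter(tokens(text))

def cosine(a, b):
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def vector_search(query, index, k):
    """Stage 1: cheap similarity search over precomputed vectors."""
    q = embed(query)
    ranked = sorted(index, key=lambda path: cosine(q, index[path]), reverse=True)
    return ranked[:k]

def overlap_score(query, snippet):
    """Stage 2 scorer (placeholder for a model): counts query terms that
    share a prefix with some snippet term, so 'checked' matches 'check'."""
    s_terms = set(tokens(snippet))
    return sum(
        1 for q in set(tokens(query))
        if any(q.startswith(s) or s.startswith(q) for s in s_terms)
    )

def retrieve(query, snippets, index, k=2, top_n=1):
    """Run stage 1 to get k candidates, then re-rank them and keep top_n."""
    candidates = vector_search(query, index, k)
    reranked = sorted(candidates, key=lambda p: overlap_score(query, snippets[p]), reverse=True)
    return reranked[:top_n]

# Hypothetical project contents, indexed once up front.
SNIPPETS = {
    "auth.py": "def login(user, password):  # check password hash and create session",
    "db.py": "def connect(url):  # open database connection",
    "utils.py": "def hash_password(password):  # hash password with salt",
}
INDEX = {path: embed(text) for path, text in SNIPPETS.items()}
QUERY = "how is the password hash checked"

print(vector_search(QUERY, INDEX, 2))   # stage 1 candidates
print(retrieve(QUERY, SNIPPETS, INDEX))  # after re-ranking
```

In this toy data the vector search ranks `utils.py` above `auth.py`, and the second stage flips the order because `auth.py` actually mentions checking the hash. That is the librarian's second pass in miniature.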

You can also explicitly point Cursor to specific files using @file or @folder tags. This is like saying, "Look specifically at these chapters of the book." Files that are already open and code around your cursor are automatically added to the context.

Windsurf takes a similar approach with its Indexing Engine. It scans your entire repository to build a searchable map of your code. The company has developed an LLM-based search tool that reportedly outperforms traditional embedding-based search for code, allowing the AI to better interpret your natural language queries and find relevant code snippets. When making suggestions, Windsurf considers both open files and automatically pulls in relevant files from elsewhere in your project. This "repo-wide awareness" means the AI understands your codebase as a connected system, not just isolated files.

Windsurf also offers "Context Pinning" – a way to keep crucial information (like design documents) always available to the AI. Think of this as putting important notes on a bulletin board that the AI can always see, no matter what else you're working on.
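One way to picture how pinned items, explicitly tagged files, open files, and retrieved files come together is a simple priority merge. The sketch below is an assumption about how such a merge could look, not either product's actual logic; the function name, the priority order, and the paths are all hypothetical.

```python
def assemble_context(pinned, mentioned, open_files, retrieved):
    """Merge context sources in priority order, dropping duplicates.

    Assumed order (hypothetical): pinned items first, then files the user
    tagged explicitly, then open files, then search results.
    """
    ordered = []
    seen = set()
    for source in (pinned, mentioned, open_files, retrieved):
        for path in source:
            if path not in seen:
                seen.add(path)
                ordered.append(path)
    return ordered

context = assemble_context(
    pinned=["docs/design.md"],
    mentioned=["src/auth.py"],
    open_files=["src/auth.py", "src/app.py"],
    retrieved=["src/db.py", "docs/design.md"],
)
print(context)
```

The pinned design document stays at the top of the list no matter what the search step returns, which is the bulletin-board behavior described above.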

How They Think

The "thinking" of these AI assistants is guided by carefully designed prompts and context management strategies.

Cursor uses structured system prompts with special tags like <communication> and <tool_calling> to organize different types of information. The AI receives clear instructions about how to behave: avoid unnecessary apologies, explain what it's doing before taking actions, and never output code directly in chat (instead use proper editing tools). These instructions shape how the AI responds to you.

Cursor also uses a technique called in-context learning – showing the AI examples of the correct format for messages and tool calls within the prompt itself. This is like training a new employee by showing them examples of proper work.
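As a rough illustration of both ideas, the sketch below renders tagged instruction sections and then appends a worked example for the model to imitate. The `<communication>` and `<tool_calling>` tags come from the description above; the `<example>` tag, the `edit_file` call, and the rule wording are assumptions made up for this sketch.

```python
def build_system_prompt(sections, examples):
    """Render tagged instruction sections followed by worked examples."""
    parts = []
    for tag, rules in sections.items():
        lines = "\n".join(f"- {rule}" for rule in rules)
        parts.append(f"<{tag}>\n{lines}\n</{tag}>")
    # In-context learning: show the model the expected message format.
    for user_msg, assistant_msg in examples:
        parts.append(f"<example>\nUser: {user_msg}\nAssistant: {assistant_msg}\n</example>")
    return "\n\n".join(parts)

PROMPT = build_system_prompt(
    sections={
        "communication": ["Avoid unnecessary apologies.", "Explain an action before taking it."],
        "tool_calling": ["Use the edit tool instead of printing code in chat."],
    },
    examples=[
        # edit_file is a hypothetical tool name used only for illustration.
        ("Rename foo to bar in utils.py", 'I will update utils.py. edit_file(path="utils.py", ...)'),
    ],
)
print(PROMPT)
```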

Windsurf's "Cascade" agent uses AI Rules (custom instructions you can set) and Memories (persistent context across sessions). Memories are particularly interesting – they can be user-created (like notes about your project's APIs) or automatically generated from previous interactions. This means Windsurf can "remember" what it learned about your codebase over time, rather than starting fresh each session.
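The persistence idea can be sketched with nothing more than a JSON file. This is a toy model under stated assumptions, not Windsurf's storage format: the `MemoryStore` class, the `source` field, and the keyword lookup are all hypothetical.

```python
import json
import tempfile
from pathlib import Path

class MemoryStore:
    """Persists short notes to a JSON file so a later session can reload them."""

    def __init__(self, path):
        self.path = Path(path)
        self.notes = json.loads(self.path.read_text()) if self.path.exists() else []

    def remember(self, text, source="user"):
        # source is "user" for hand-written notes, "auto" for generated ones.
        self.notes.append({"text": text, "source": source})
        self.path.write_text(json.dumps(self.notes, indent=2))

    def recall(self, keyword):
        return [n["text"] for n in self.notes if keyword.lower() in n["text"].lower()]

with tempfile.TemporaryDirectory() as tmp:
    store_path = Path(tmp) / "memories.json"
    first_session = MemoryStore(store_path)
    first_session.remember("The billing API lives in services/billing.py")
    first_session.remember("Tests run with pytest -q", source="auto")
    second_session = MemoryStore(store_path)  # simulates a fresh session
    recalled = second_session.recall("billing")

print(recalled)
```

The second `MemoryStore` never saw the `remember` calls, yet it can still answer the question about billing, because the notes were reloaded from disk.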

Both systems carefully manage their context window (the amount of text they can consider at once). They use strategies to compress information and prioritize what's most relevant to your current task.
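A simple way to think about prioritization is a greedy packer: take the most relevant snippets first and stop adding once the budget is spent. The sketch below assumes relevance scores are already available and uses a rough four-characters-per-token estimate. Both are simplifications for illustration; neither product has published its actual strategy in this form.

```python
def estimate_tokens(text):
    """Rough heuristic (an assumption): about four characters per token."""
    return max(1, len(text) // 4)

def fit_to_budget(snippets, max_tokens):
    """Greedily keep the most relevant snippets that fit in the budget.

    snippets is a list of (relevance, text) pairs.
    """
    chosen = []
    used = 0
    for relevance, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= max_tokens:
            chosen.append(text)
            used += cost
    return chosen

kept = fit_to_budget(
    [(0.9, "a" * 40), (0.5, "b" * 40), (0.7, "c" * 20)],
    max_tokens=15,
)
print([text[0] for text in kept])
```

Here the lowest-scoring snippet is dropped because the two more relevant ones already fill the budget.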

How They Act

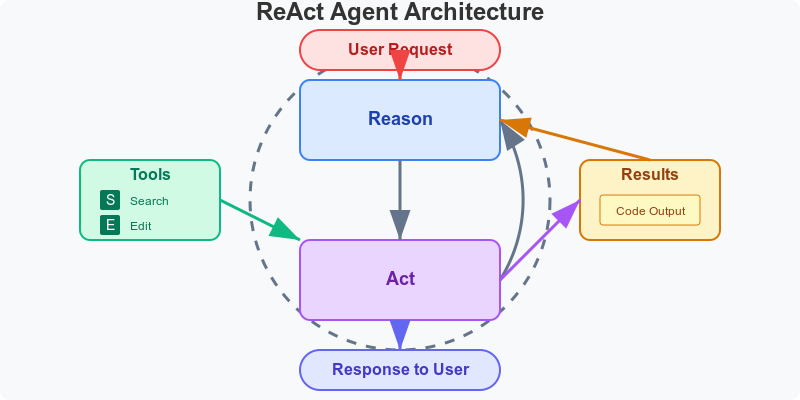

Both Cursor and Windsurf transform a simple language model into a multi-step coding agent using what's called a ReAct (Reason+Act) pattern.

Cursor's agent operates in a loop: the AI decides which tool to use, explains what it's doing, calls the tool, sees the result, and then decides on the next step. Available tools include searching the codebase, reading files, editing code, running shell commands, and even browsing the web for documentation.
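The loop itself is short when written out. In the sketch below, `scripted_policy` stands in for the language model and simply follows a fixed script. The tool names, the fake file, and the step limit are assumptions for illustration, not Cursor's real tool set.

```python
def run_agent(policy, tools, goal, max_steps=10):
    """Reason, act, observe, repeat. Returns the full step history."""
    history = []  # each entry: (thought, tool_name, tool_input, observation)
    for _ in range(max_steps):
        thought, tool_name, tool_input = policy(goal, history)
        if tool_name == "finish":
            history.append((thought, "finish", tool_input, ""))
            break
        observation = tools[tool_name](tool_input)
        history.append((thought, tool_name, tool_input, observation))
    return history

def scripted_policy(goal, history):
    """Stand-in for the language model: a fixed three-step script."""
    if not history:
        return ("Find files related to the goal.", "search", goal)
    if len(history) == 1:
        path = history[0][3]  # the search observation
        return (f"Read {path} to understand the issue.", "read_file", path)
    return ("I have enough information.", "finish", "done")

# Hypothetical project and tools.
FILES = {"auth.py": "def login(user): return True  # bug: no password check"}
TOOLS = {
    "search": lambda query: "auth.py",
    "read_file": lambda path: FILES[path],
}

history = run_agent(scripted_policy, TOOLS, "authentication bug")
for thought, tool, tool_input, observation in history:
    print(f"{thought} -> {tool}({tool_input!r})")
```

Each observation is fed back into the next decision, which is what separates an agent loop from a single prompt-and-response exchange.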

A crucial optimization in Cursor is its "special diff syntax" for code edits. Instead of having the AI rewrite entire files, it only proposes semantic patches (the specific changes needed). A separate, faster "apply model" then handles merging these patches into the codebase. This is more efficient and reduces errors. Cursor also runs all experimental code in a protected sandbox environment, ensuring that the AI's experiments won't accidentally break your actual project.
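Cursor's actual diff syntax and apply model are not public, so the following is only a sketch of the general idea: the reasoning model emits a small search-and-replace patch, and a separate, cheap step merges it into the file. The `Patch` class and the exactly-one-match rule are assumptions chosen to keep the example safe and simple.

```python
from dataclasses import dataclass

@dataclass
class Patch:
    search: str   # text that must already exist in the file
    replace: str  # text to put in its place

def apply_patch(source, patch):
    """Apply a patch only if its search text matches exactly once."""
    count = source.count(patch.search)
    if count != 1:
        raise ValueError(f"expected exactly one match, found {count}")
    return source.replace(patch.search, patch.replace, 1)

original = "def add(a, b):\n    return a - b\n"
patched = apply_patch(original, Patch(search="return a - b", replace="return a + b"))
print(patched)
```

Refusing ambiguous patches is one way a small patch format can reduce errors: the edit either lands where it was intended or fails loudly.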

For example, if you ask Cursor to "fix the authentication bug," it might first search your codebase for authentication-related files, then read those files to understand the issue, make edits to fix the bug, and finally run tests to verify the solution. Each step is clearly explained to you as it happens. Importantly, Cursor limits these self-correction loops (e.g., "DO NOT loop more than 3 times on fixing linter errors") to avoid infinite cycles.
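The loop limit is easy to express in code. In this sketch the linter and the fixer are toy stand-ins (the linter flags tab characters and the fixer repairs one per attempt), and the limit of three mirrors the instruction quoted above.

```python
MAX_FIX_ATTEMPTS = 3

def fix_until_clean(code, lint, propose_fix):
    """Try to fix lint errors, giving up after MAX_FIX_ATTEMPTS fixes.

    Returns (code, fixes_applied, is_clean).
    """
    for fixes_applied in range(MAX_FIX_ATTEMPTS):
        errors = lint(code)
        if not errors:
            return code, fixes_applied, True
        code = propose_fix(code, errors)
    return code, MAX_FIX_ATTEMPTS, not lint(code)

def lint_tabs(code):
    """Toy linter: reports the position of every tab character."""
    return [i for i, ch in enumerate(code) if ch == "\t"]

def fix_first_tab(code, errors):
    """Toy fixer: repairs only one problem per attempt."""
    return code.replace("\t", "    ", 1)

print(fix_until_clean("\tx\n\ty\n", lint_tabs, fix_first_tab))
print(fix_until_clean("\t" * 5, lint_tabs, fix_first_tab)[1:])
```

The second call stops after three fixes with errors still present, at which point a real assistant would hand control back to you instead of cycling forever.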

Cursor even uses what could loosely be called a "mixture-of-experts" approach (a division of labor between separate models, not the neural-network architecture of the same name): a powerful model (like GPT-4 or Claude) does the high-level reasoning, while specialized smaller models handle specific tasks like applying code changes. This is like having a senior architect make the important decisions while specialized contractors handle the detailed work.

Windsurf's Cascade works similarly but emphasizes its "AI Flows" concept. When you make a request, Cascade will generate a plan, make code changes, and ask for your approval before running code. If you approve, it can execute the code in an integrated AI Terminal, analyze the results, and propose fixes if there are errors.

Windsurf's agent architecture is particularly powerful: it can chain together up to 20 tool calls in a single flow without requiring user intervention. These tools include natural language code search, terminal commands, file editing, and MCP (Model Context Protocol) connectors to external services. This allows Cascade to handle complex, multi-step tasks like installing dependencies, configuring a project, and implementing new features in one cohesive sequence.

Impressively, Cascade notices when you manually change code during this process and adapts accordingly – if you modify a function parameter, it will automatically update all places where that function is called. This creates a tight feedback loop where you and the AI truly collaborate in real-time.

The Brains Inside

These systems use multiple AI models for different purposes, balancing quality with speed.

Cursor's model architecture uses what's known as an "Embed-Think-Do" agent loop. The system routes specific tasks to the most appropriate model based on the operation. For instance, Cursor leverages models with massive context windows (like Claude with 100k tokens) to handle entire project contexts and complex reasoning. This allows it to "see" much more of your codebase at once than earlier AI assistants could.

For embedding generation, Cursor likely uses specialized encoder models like OpenAI's text-embedding-ada-002. For code completion and editing, it dynamically selects between models based on the complexity of the task and user settings. The key innovation is this intelligent routing layer that determines when to use the heavyweight models versus the lightweight ones, optimizing for both quality and responsiveness.
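A routing layer can be as simple as a few rules. The sketch below is hypothetical throughout: the model labels are placeholders rather than real model names, the token threshold is invented, and the real routing logic is not public.

```python
from dataclasses import dataclass
from typing import Optional

LARGE_CONTEXT_THRESHOLD = 8_000  # hypothetical cutoff, in tokens

@dataclass
class Request:
    task: str                       # "embed", "apply_edit", "complete", or "chat"
    context_tokens: int
    user_model: Optional[str] = None  # model chosen in user settings, if any

def route(request):
    """Pick a model label for a request using simple, ordered rules."""
    if request.task == "embed":
        return "embedding-model"      # specialized encoder
    if request.task == "apply_edit":
        return "fast-apply-model"     # small model that merges patches
    if request.user_model:
        return request.user_model     # respect the user's setting
    if request.context_tokens > LARGE_CONTEXT_THRESHOLD:
        return "large-context-model"  # heavyweight reasoning model
    return "small-fast-model"         # quick completions

print(route(Request("complete", 500)))
print(route(Request("chat", 50_000)))
print(route(Request("chat", 50_000, user_model="my-preferred-model")))
```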

Windsurf has invested in training its own code-specialized models based on Meta's Llama architecture. They offer a "Base Model" (70 billion parameters) for everyday coding tasks and a "Premier Model" (405 billion parameters) for the most complex challenges. Interestingly, Windsurf also allows users to choose external models like GPT-4 or Claude, making their system model-agnostic.

This model flexibility means Windsurf can match the right brain to the right task – using smaller models for quick suggestions and massive models for complex multi-file operations.

Staying In Sync

Real-time adaptation is crucial for a natural coding experience. Both systems implement sophisticated techniques to stay in sync with you.

Cursor streams the AI's response token-by-token, so you see code being written in real-time. If the AI's code introduces errors, Cursor will automatically detect this and attempt to fix it without user intervention – like a self-correcting loop where the AI debugs its own output.

Cursor also tracks your text cursor position to guide completions and even tries to predict where you might edit next, a feature aptly called "Cursor prediction." In the background, it continuously updates its vector index as files change, ensuring that new code becomes searchable almost immediately. This constant reindexing means the AI's knowledge of your codebase is never stale.
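Continuous reindexing stays cheap if only changed files are re-embedded. One common way to detect changes is to compare content hashes, sketched below. Whether Cursor does it this way is an assumption; the `IncrementalIndex` class is hypothetical, and `len` stands in for a real embedding function.

```python
import hashlib

class IncrementalIndex:
    """Re-embeds only files whose content hash has changed."""

    def __init__(self, embed):
        self.embed = embed
        self.hashes = {}
        self.vectors = {}

    def sync(self, files):
        """files maps path -> text. Returns the paths that were re-embedded."""
        changed = []
        for path, text in files.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.hashes.get(path) != digest:
                self.hashes[path] = digest
                self.vectors[path] = self.embed(text)
                changed.append(path)
        # Forget files that no longer exist.
        for path in list(self.hashes):
            if path not in files:
                del self.hashes[path]
                del self.vectors[path]
        return changed

index = IncrementalIndex(embed=len)  # len is a placeholder for a real encoder
first = index.sync({"a.py": "x = 1", "b.py": "y = 2"})
second = index.sync({"a.py": "x = 1", "b.py": "y = 22"})
third = index.sync({"a.py": "x = 1"})
print(first, second, third)
```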

Windsurf emphasizes keeping you "in flow" with similar streaming features. Its standout capability is how the Cascade agent reacts to your edits in real-time – if you modify code during an AI Flow, Cascade notices and adjusts its plan accordingly.

This responsiveness is built on an event-driven architecture where specific user actions (like saving a file or changing text) trigger the AI to re-run its reasoning with the updated state. The system uses server-sent events (SSE) to maintain synchronization between the editor, terminal, and AI chat components.
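Here is a small sketch of that pattern: an in-process event bus whose handler reacts to a file save and queues a message in the Server-Sent Events wire format (an `event:` line, a `data:` line, then a blank line). The event names, the payload fields, and the `EventBus` class are assumptions for illustration, not Windsurf's actual protocol.

```python
import json
from collections import defaultdict

def format_sse(event, data):
    """Encode one message in the Server-Sent Events wire format."""
    return f"event: {event}\ndata: {json.dumps(data)}\n\n"

class EventBus:
    """Minimal publish/subscribe dispatcher."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def subscribe(self, event, handler):
        self.handlers[event].append(handler)

    def publish(self, event, data):
        return [handler(data) for handler in self.handlers[event]]

bus = EventBus()
outbox = []  # messages that would be streamed to the editor UI

def replan_on_save(data):
    """Hypothetical handler: a save triggers a plan update notification."""
    message = format_sse("plan_updated", {"reason": "file_saved", "path": data["path"]})
    outbox.append(message)
    return message

bus.subscribe("file_saved", replan_on_save)
bus.publish("file_saved", {"path": "auth.py"})
print(outbox[0])
```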

Windsurf actively scans for issues as you work. If you run code and get an error, the assistant can immediately see that error and help resolve it without you needing to copy-paste anything. This creates an experience where the AI feels like an attentive partner watching your code, listening to your commands, and proactively adjusting its strategy.
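The "no copy-paste" part boils down to the assistant running the command itself and reading the error output directly. A minimal sketch, assuming a Python snippet is being run and using the standard `subprocess` module; the wording of the generated request is made up.

```python
import subprocess
import sys

def run_and_capture(code):
    """Run a Python snippet in a subprocess and capture its error output."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=30,
    )
    return result.returncode, result.stderr

def build_fix_request(code):
    """Return a message for the assistant if the run failed, else None."""
    returncode, stderr = run_and_capture(code)
    if returncode == 0:
        return None
    lines = stderr.strip().splitlines()
    summary = lines[-1] if lines else f"exit code {returncode}"
    return f"The command failed with: {summary}\nFull error output:\n{stderr}"

print(build_fix_request("print('ok')"))
print(build_fix_request("1 / 0"))
```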

Note

This analysis is based on public research and represents my understanding of these systems at the time of writing. Technical details may have evolved since publication.

It's good to know how others have built their systems. I agree that sometimes you need smaller models to focus on specific tasks: I have a JSON extraction agent built on GPT-4.1 mini/nano that does the heavy lifting, while o4-mini or GPT-4.1 helps me in the planning phase. I would love to get your feedback on my implementation - https://github.com/tysonthomas9/browser-operator-devtools-frontend
