Google’s Agent2Agent (A2A) Explained

Enabling AI Agents to Team Up and Speak a Common Language

Imagine walking into a bustling office where brilliant specialists work on complex projects. In one corner, a research analyst digs through data. Nearby, a design expert crafts visuals. At another desk, a logistics coordinator plans shipments. When these experts need to collaborate, they simply talk to each other - sharing information, asking questions, and combining their talents to solve problems no individual could tackle alone.

Now imagine if each expert were sealed in a soundproof booth, able to do their individual work brilliantly but completely unable to communicate with colleagues. The office's collective potential would collapse.

This is precisely the challenge facing today's AI agents. While individual AI systems grow increasingly capable at specialized tasks, they often can't collaborate effectively with each other. Enter Agent2Agent (A2A) - a communication framework that allows AI systems to work together like a well-coordinated team.

Link to my GitHub repo that contains a code tutorial on A2A: Repo Link

Why AI Agents Need to Talk

Today's AI landscape resembles islands of expertise. One agent might excel at scheduling, another at data analysis, and another at creative writing. But these specialists typically operate in isolation, even when solving a problem would benefit from their combined abilities.

Consider a seemingly simple request: "Plan my business trip to Chicago next month." This actually requires multiple types of expertise:

Calendar management to find available dates

Travel knowledge to book appropriate flights and hotels

Budget awareness to make cost-effective choices

Location intelligence to schedule meetings efficiently

Sure, we could build one massive AI system that tries to do all of these things - a "super agent" that handles everything from calendar management to travel planning to budgeting. But this creates two major problems:

First, it's incredibly complex to build and maintain such a system. Every time you want to add a new capability, you have to integrate it into the core system, potentially destabilizing existing functions.

Second, and perhaps more importantly, it forces organizations to recreate capabilities that already exist elsewhere. Why should every company build their own flight booking AI when specialized travel booking agents already exist? Why rebuild calendar optimization when calendar specialists have perfected this capability?

A2A solves this by allowing your specialized agent to connect with other specialized agents built by different teams or companies. You focus on building what you do best, and simply connect to other experts for the rest. This modular approach means we can collectively solve more complex problems without each team needing to rebuild everything from scratch.

Without communication between agents, each specialized system can only handle its slice of the problem. The user must manually coordinate between them, becoming the human switchboard operator connecting isolated AI capabilities.

A Universal Language for AI

A2A creates a common language that any AI agent can use to talk with other agents, regardless of who built them or how they function internally. Think of it like establishing English or Mandarin as a universal language in an international workplace - once everyone speaks it, collaboration becomes possible.

What makes A2A powerful is that it defines not just how agents exchange information, but how they coordinate on tasks over time:

Introduction Protocol: Agents can discover each other's capabilities through "Agent Cards" - essentially digital résumés listing what each agent can do and how to reach them.

Task Management: Agents can assign work to each other and track progress. If the calendar agent needs flight options, it can send a formal request to the travel agent and monitor the status.

Rich Communication: Agents can exchange not just text, but also images, structured data, files, and other formats needed to collaborate effectively.

Clarification Mechanism: If an agent needs more information to complete a task, it can pause and request clarification - just as a human colleague might ask follow-up questions before proceeding.

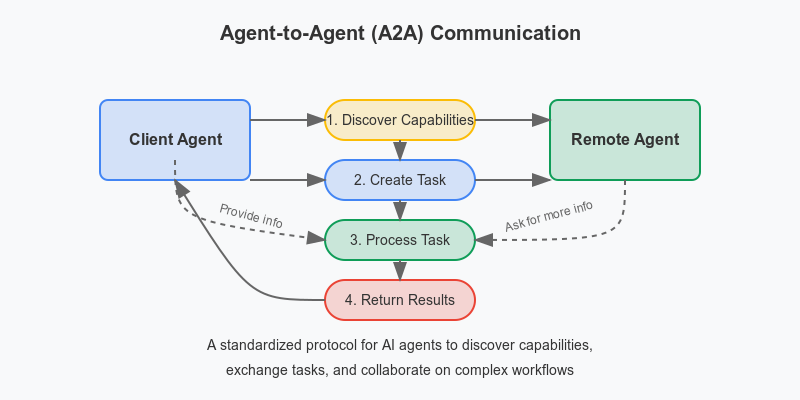

How A2A Works: Behind the Scenes

Let's imagine you ask your personal AI assistant: "Help me plan my daughter's birthday party next weekend."

Behind the scenes, your primary assistant (we'll call it Alex) recognizes this requires multiple expertise areas. Using A2A, here's how Alex might collaborate with specialist agents:

Alex checks its Agent Card directory and finds specialized agents for event planning, catering recommendations, and invitation design. Technically, Alex requests their Agent Cards (JSON files typically hosted at a standard URL like https://agent-domain/.well-known/agent.json) that describe each agent's capabilities, endpoints, and required authentication.

Alex creates separate tasks for each specialized agent by sending HTTP requests to their A2A endpoints:

To the event planner: "Need venue and activity ideas for an 8-year-old girl's birthday next Saturday afternoon."

To the catering advisor: "Seeking cake and food options for 12 children and 6 adults at a birthday party."

To the design agent: "Create fun invitation templates for a child's birthday party."

Each task gets assigned a unique ID and follows a specific lifecycle (submitted → working → completed/failed/input-required).

The event planner agent sets its task state to "input-required" and responds with a message containing a TextPart asking: "What's your budget range?" Alex either answers from what it knows about you or asks you directly before responding with another message to update the task.

The catering agent sends back a message containing a DataPart (structured JSON data) with menu options, prices and dietary information, which Alex can transform into a user-friendly display format. The task state is updated to "completed."

The design agent creates invitation templates as image files, packages them as FilePart objects within an Artifact, and marks its task as "completed." Alex can then present these templates as visual options.

Throughout this process, long-running tasks can provide real-time updates through Server-Sent Events (SSE), allowing agents to stream progress information. Authentication between agents happens using standard protocols like OAuth or API keys defined in the Agent Cards.
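The clarification round trip from the walkthrough can be sketched in a few lines. This is a minimal in-memory simulation, not real A2A traffic: the `Task` class, the `event_planner` function, and the message dictionaries are all hypothetical stand-ins, and no HTTP calls are made. It only shows the shape of the exchange: the specialist pauses in "input-required", the client answers, and the task then completes.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Task:
    """Hypothetical in-memory stand-in for an A2A task."""
    request: str
    id: str = field(default_factory=lambda: str(uuid.uuid4()))  # unique task ID
    state: str = "submitted"
    messages: list = field(default_factory=list)


def event_planner(task: Task) -> Task:
    """Toy specialist agent: asks for a budget before it will finish."""
    task.state = "working"
    user_texts = [m["text"] for m in task.messages if m["role"] == "user"]
    if not any("budget" in text.lower() for text in user_texts):
        # Pause the task and ask the client a follow-up question.
        task.state = "input-required"
        task.messages.append({"role": "agent", "text": "What's your budget range?"})
    else:
        task.state = "completed"
        task.messages.append({"role": "agent", "text": "Suggested venue: trampoline park."})
    return task


def run_with_clarification(task: Task, known_facts: dict) -> Task:
    """Client side (Alex): send the task, answer one follow-up, resend."""
    task.messages.append({"role": "user", "text": task.request})
    task = event_planner(task)
    if task.state == "input-required":
        # Alex answers from what it already knows about the user.
        task.messages.append({"role": "user", "text": f"Budget: {known_facts['budget']}"})
        task = event_planner(task)
    return task


task = run_with_clarification(
    Task("Need venue and activity ideas for a birthday party."),
    known_facts={"budget": "$300-$500"},
)
print(task.state)
```

In a real deployment the two function calls would be network requests to the event planner's A2A endpoint, and Alex might need to ask the user for the budget instead of reading it from `known_facts`.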

You simply interact with Alex, unaware of the multi-agent symphony orchestrating your request. The final result feels cohesive because A2A handled all the behind-the-scenes coordination.

How A2A Fits in the AI Ecosystem

A2A doesn't exist in isolation. It's part of a broader movement toward more capable and interoperable AI systems. Another important protocol in this space is the Model Context Protocol (MCP), which focuses on how individual agents use tools and context.

Think of it this way: if A2A is about helping AI agents talk to each other, MCP is about equipping individual agents with better tools and information. Using a workplace analogy, MCP gives each worker access to the right equipment and resources (their "toolbox"), while A2A establishes how workers communicate and collaborate on projects.

These protocols are complementary rather than competitive. An individual agent might use MCP to access tools and context it needs to perform its specialized function, then use A2A to coordinate with other agents on multi-part tasks. Together, they create an ecosystem where agents can both leverage tools effectively and engage with one another productively.

Both represent a shift in AI design philosophy - moving from isolated, do-everything models toward an ecosystem of specialized components that work together, much like human organizations evolved to include specialized roles that coordinate through standard processes.

The Technical Architecture of A2A

Beneath the friendly metaphors, A2A implements several technical components that make agent collaboration possible:

Client-Server Model: In any A2A interaction, one agent acts as the client (initiator) and the other as the server (responder). These roles are fluid and can switch depending on the context.

Agent Cards: These are JSON files that serve as capability manifests, typically hosted at a standardized endpoint (like /.well-known/agent.json). They contain:

The agent's capabilities and supported operations

Endpoint URLs for communication

Authentication requirements

Supported message formats and content types
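To make the Agent Card idea concrete, here is a rough sketch of how a client might locate a card and check what an agent offers. The `/.well-known/agent.json` path comes from the text above; everything else is an assumption for illustration, including the `planner.example.com` domain, the helper names, and the field names in the sample card, which are not taken from the official schema.

```python
import json
from urllib.parse import urljoin

WELL_KNOWN_PATH = "/.well-known/agent.json"  # path quoted in the article


def agent_card_url(base_url: str) -> str:
    """Build the conventional Agent Card URL for an agent's base URL."""
    return urljoin(base_url, WELL_KNOWN_PATH)


# Illustrative card only; these field names are assumptions, not the official schema.
SAMPLE_CARD_JSON = """
{
  "name": "Event Planner",
  "url": "https://planner.example.com/a2a",
  "capabilities": {"streaming": true},
  "authentication": {"schemes": ["bearer"]},
  "skills": [
    {"id": "venue-search", "name": "Find venues"},
    {"id": "activity-ideas", "name": "Suggest activities"}
  ]
}
"""


def skill_ids(card: dict) -> list:
    """List the skill identifiers a card advertises."""
    return [skill["id"] for skill in card.get("skills", [])]


def supports(card: dict, skill_id: str) -> bool:
    """Check whether the card advertises a given skill."""
    return skill_id in skill_ids(card)


card = json.loads(SAMPLE_CARD_JSON)
print(agent_card_url("https://planner.example.com"))
print(skill_ids(card))
```

A client would normally fetch the JSON over HTTPS from the URL that `agent_card_url` returns; the sample is parsed from a string here so the sketch runs without a network.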

Tasks with State Management: Each piece of work in A2A is encapsulated as a Task with:

Unique identifier

Lifecycle states (submitted, working, input-required, completed, failed, canceled)

Metadata including timestamps and ownership information
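One way to picture the task lifecycle is as a small state machine. The state names below are the ones listed above; the table of allowed transitions is an assumption made for this sketch (for example, treating completed, failed, and canceled as terminal), not something taken from the specification.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


# Assumed transition table for illustration; terminal states allow no moves.
ALLOWED_TRANSITIONS = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {
        TaskState.INPUT_REQUIRED,
        TaskState.COMPLETED,
        TaskState.FAILED,
        TaskState.CANCELED,
    },
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.COMPLETED: set(),
    TaskState.FAILED: set(),
    TaskState.CANCELED: set(),
}


def transition(current: TaskState, new: TaskState) -> TaskState:
    """Return the new state, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"cannot move task from {current.value} to {new.value}")
    return new


state = TaskState.SUBMITTED
for step in (TaskState.WORKING, TaskState.INPUT_REQUIRED, TaskState.WORKING, TaskState.COMPLETED):
    state = transition(state, step)
print(state.value)
```

Rejecting moves out of a terminal state keeps both agents in agreement about when a task is finished.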

Message Structure: Communications consist of Messages containing one or more Parts:

TextPart: For plain text or formatted text content

DataPart: For structured data like JSON

FilePart: For binary data or references to files

Each part has a MIME type to indicate content format
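The three part types can be modeled as small data classes that serialize into a single message. This is a sketch under assumptions: the class names mirror the terms above, but the JSON field names (`type`, `mimeType`, `bytes`) and the `build_message` helper are illustrative choices rather than the protocol's confirmed wire format.

```python
import base64
import json
from dataclasses import dataclass
from typing import List, Union


@dataclass
class TextPart:
    text: str

    def to_dict(self) -> dict:
        return {"type": "text", "text": self.text}


@dataclass
class DataPart:
    data: dict

    def to_dict(self) -> dict:
        return {"type": "data", "data": self.data}


@dataclass
class FilePart:
    name: str
    mime_type: str
    content: bytes

    def to_dict(self) -> dict:
        # Binary content is base64-encoded so it can travel inside JSON.
        encoded = base64.b64encode(self.content).decode("ascii")
        return {
            "type": "file",
            "file": {"name": self.name, "mimeType": self.mime_type, "bytes": encoded},
        }


Part = Union[TextPart, DataPart, FilePart]


def build_message(role: str, parts: List[Part]) -> dict:
    """Assemble a message dict from a role and a list of parts."""
    return {"role": role, "parts": [part.to_dict() for part in parts]}


message = build_message(
    "agent",
    [
        TextPart("Here are the menu options."),
        DataPart({"menu": [{"item": "cake", "price": 45}]}),
        FilePart("invite.png", "image/png", b"\x89PNG"),
    ],
)
print(json.dumps(message, indent=2))
```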

Transport Protocol: A2A typically uses HTTP/HTTPS with:

HTTP endpoints that accept JSON-RPC 2.0 requests for task creation and updates

Server-Sent Events (SSE) for streaming updates on long-running tasks

Optional webhook support for asynchronous notifications
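For the streaming piece, the client side mostly comes down to reading a Server-Sent Events stream. The sketch below parses the standard SSE line format (`data:` lines, with a blank line ending each event) and decodes each event as JSON. The payload fields (`taskId`, `state`) are hypothetical, and the stream is a hard-coded list of lines here rather than a live HTTP response.

```python
import json
from typing import Iterable, Iterator


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one decoded JSON payload per SSE event."""
    data_lines = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line ends the current event.
            if data_lines:
                yield json.loads("\n".join(data_lines))
                data_lines = []
            continue
        if line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space after the colon is dropped
        if field == "data":
            data_lines.append(value)
    # An event with no terminating blank line is discarded.


# Hypothetical update payloads; real A2A events carry more structure.
stream = [
    ": keep-alive\n",
    'data: {"taskId": "t-1", "state": "working"}\n',
    "\n",
    'data: {"taskId": "t-1", "state": "completed"}\n',
    "\n",
]
states = [event["state"] for event in parse_sse(stream)]
print(states)
```

With a real connection, `lines` would be the decoded lines of a long-lived HTTP response whose content type is `text/event-stream`.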

Security Layer: Authentication between agents relies on standard mechanisms declared in the Agent Card:

OAuth 2.0 flows

API keys

JWT tokens

Access control permissions
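As a rough illustration of how a client could turn an Agent Card's declared authentication into request headers, consider the sketch below. The `Authorization: Bearer <token>` form is the standard way to send OAuth 2.0 bearer tokens; the `schemes` field, the scheme names, the `X-API-Key` header, and the `build_auth_headers` helper are assumptions made for this example.

```python
def build_auth_headers(card_auth: dict, credentials: dict) -> dict:
    """Pick the first scheme the card declares that we hold credentials for."""
    schemes = [scheme.lower() for scheme in card_auth.get("schemes", [])]
    if "bearer" in schemes and "token" in credentials:
        # Standard bearer-token header (used for OAuth 2.0 access tokens and JWTs).
        return {"Authorization": f"Bearer {credentials['token']}"}
    if "apikey" in schemes and "api_key" in credentials:
        # Header name is a common convention, assumed here for illustration.
        return {"X-API-Key": credentials["api_key"]}
    raise ValueError("no credentials match the schemes the agent accepts")


bearer_headers = build_auth_headers({"schemes": ["Bearer"]}, {"token": "abc123"})
key_headers = build_auth_headers({"schemes": ["apiKey"]}, {"api_key": "k-42"})
print(bearer_headers)
print(key_headers)
```

Failing loudly when nothing matches is deliberate: it is safer for the client to stop than to send an unauthenticated request to another agent.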

This architecture means A2A can handle everything from quick question-answer exchanges to complex, long-running collaborative workflows that might take hours or days to complete.

The Whole Greater Than the Sum

The true power of A2A emerges when we stop thinking about individual AI capabilities and start envisioning networks of specialized expertise working together. Just as human civilization advanced dramatically when we developed specialization and trade, AI systems will make a similar leap when they can effectively collaborate.

This framework creates several advantages:

Modular Improvement: If a better calendar agent comes along, you can swap it into your AI ecosystem without disrupting other components.

Progressive Automation: Complex workflows that once required human coordination can increasingly run autonomously between agents.

Specialized Excellence: Rather than building one AI that's mediocre at everything, developers can create agents that truly excel in specific domains.

The future isn't a single all-powerful AI but rather a collaborative network of specialized agents working together - much like human organizations combine specialized roles into effective teams. A2A provides the communication infrastructure to make this collaboration possible, moving us closer to AI systems that can truly handle the complexity of real-world problems.

Looking Forward

As AI agents become more capable of working together through frameworks like A2A, we'll increasingly shift from commanding individual AI tools to delegating outcomes to teams of AI agents that coordinate among themselves. The user experience will become simpler while the capabilities grow more powerful - the hallmark of truly mature technology.

Like human colleagues brainstorming around a conference table, AI agents using A2A protocols can combine their unique perspectives and abilities to deliver solutions that no single agent could provide alone. That's the promise of A2A - not just smarter individual AIs, but smarter collaboration between them.
